The following is a partnership between Packt Publishing and me. The idea is for me to be a channel of valuable information made by independent publishers.

This disclaimer informs readers that the views, thoughts, and opinions expressed in the text belong solely to the author.

The Road to WebAssembly

Web development has had an interesting history, to say the least. Several (failed) attempts have been made to expand the platform to support different languages. Clunky solutions such as plugins failed to stand the test of time, and limiting a user to a single browser is a recipe for disaster.

WebAssembly was developed as an elegant solution to a problem that has existed since browsers were first able to execute code: If you want to develop for the web, you have to use JavaScript. Fortunately, using JavaScript doesn’t have the same negative connotations it had back in the early 2000s, but it continues to have certain limitations as a programming language. In this section, we’re going to discuss the technologies that led to WebAssembly to get a better grasp of why this new technology is needed.

The evolution of JavaScript

JavaScript was created by Brendan Eich in just 10 days back in 1995. Originally seen as a toy language by programmers, it was used primarily to make buttons flash or banners appear on a web page. The last decade has seen JavaScript evolve from a toy to a platform with profound capabilities and a massive following.

In 2008, heavy competition in the browser market resulted in the addition of just-in-time (JIT) compilers, which increased the execution speed of JavaScript by a factor of 10. Node.js debuted in 2009 and represented a paradigm shift in web development.

Ryan Dahl combined Google’s V8 JavaScript engine, an event loop, and a low-level I/O API to build a platform that allowed for the use of JavaScript across the server and client side. Node.js led to npm, a package manager that allowed for the development of libraries to be used within the Node.js ecosystem.

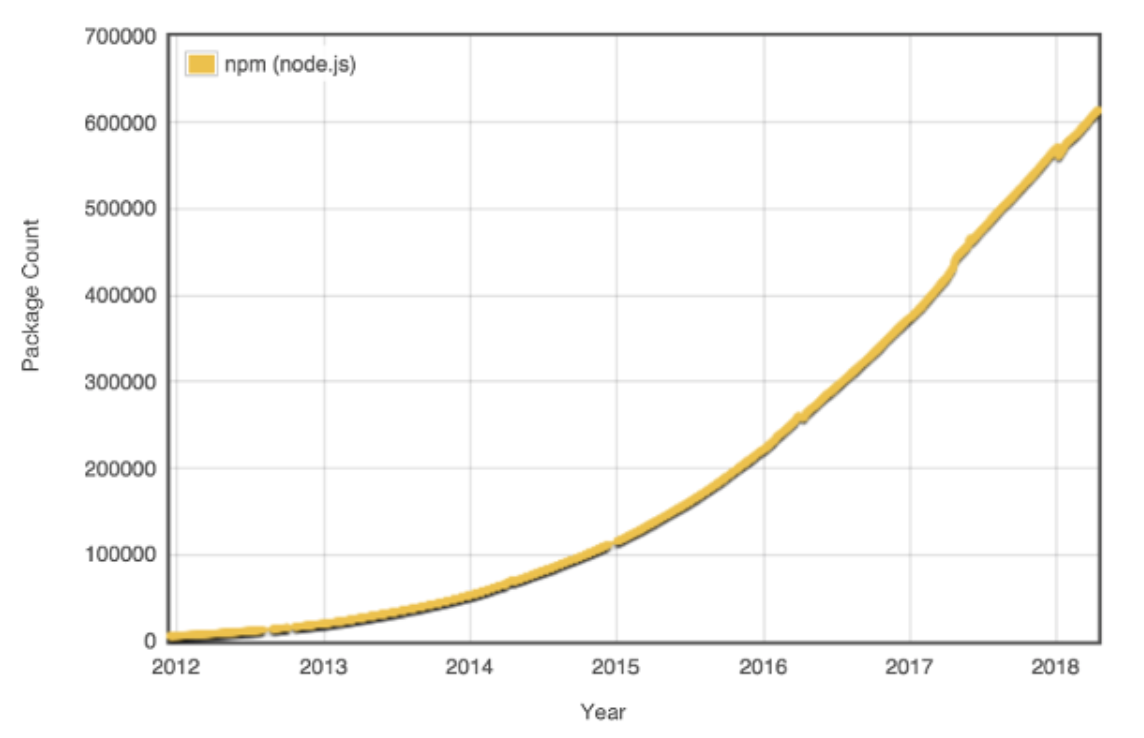

Figure 1: Package count growth on npm since 2012, taken from Modulecounts

It’s not just the Node.js ecosystem that is growing; JavaScript itself is being actively developed. The ECMA Technical Committee 39 (TC39), which dictates the standards for JavaScript and oversees the addition of new language features, releases yearly updates to JavaScript with a community-driven proposal process.

Between its wealth of libraries and tooling, constant improvements to the language, and possessing one of the largest communities of programmers, JavaScript has become a force to be reckoned with.

But the language does have some shortcomings:

- Until recently, JavaScript only included 64-bit floating-point numbers. This can cause precision issues with very large or very small numbers. BigInt, a new numeric primitive that can alleviate some of these issues, is in the process of being added to the ECMAScript specification, but it may take some time until it’s fully supported in browsers.

- JavaScript is weakly typed, which adds to its flexibility, but can cause confusion and bugs. It essentially gives you enough rope to hang yourself.

- JavaScript isn’t as performant as compiled languages despite the best efforts of the browser vendors.

- If a developer wants to create a web application, they need to learn JavaScript—whether they like it or not.
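The first two shortcomings are easy to reproduce. The snippet below is a minimal sketch, not taken from the book: the variable names are illustrative, and the `BigInt` lines assume a runtime and compiler configuration that already support it.

```typescript
// Every JavaScript number is a 64-bit float, so integers are only
// exact up to Number.MAX_SAFE_INTEGER (2^53 - 1).
const maxSafe: number = Number.MAX_SAFE_INTEGER; // 9007199254740991
const collides: boolean = maxSafe + 1 === maxSafe + 2; // true

// Decimal fractions are approximated in binary floating point.
const sumIsExact: boolean = 0.1 + 0.2 === 0.3; // false

// BigInt (assumed to be available here) keeps large integers exact.
const big: bigint = BigInt(maxSafe) + BigInt(2);
const bigText: string = big.toString(); // "9007199254740993"

// Weak typing: `+` silently converts the number to a string.
const count: number = 1;
const joined: string = "1" + count; // "11", not 2

console.log(collides, sumIsExact, bigText, joined);
```

None of these lines throws or warns at run time, which is what makes this class of bug hard to spot.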

To avoid having to write more than a few lines of JavaScript, some developers built transpilers to convert other languages to JavaScript. Transpilers (or source-to-source compilers) are types of compilers that convert source code in one programming language to equivalent source code in another programming language.

TypeScript, which is a popular tool for frontend JavaScript development, transpiles TypeScript to valid JavaScript targeted for browsers or Node.js. Pick any programming language and there’s a good chance that someone created a JavaScript transpiler for it. For example, if you prefer to write Python, you have about 15 different tools that you can use to generate JavaScript. In the end, though, it’s still JavaScript, so you’re still subject to the idiosyncrasies of the language.
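To make the last point concrete, here is a small hedged sketch of what transpiling does and does not give you. The `Point` interface, the `distance` function, and the JSON payload are hypothetical, and the emitted JavaScript shown in the comment is approximate rather than the exact output of any particular compiler version.

```typescript
// TypeScript source: the annotations exist only at compile time.
interface Point {
  x: number;
  y: number;
}

function distance(a: Point, b: Point): number {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  return Math.sqrt(dx * dx + dy * dy);
}

// After transpiling, the emitted JavaScript is roughly:
//
//   function distance(a, b) {
//     const dx = a.x - b.x;
//     const dy = a.y - b.y;
//     return Math.sqrt(dx * dx + dy * dy);
//   }

console.log(distance({ x: 0, y: 0 }, { x: 3, y: 4 })); // 5

// The types are erased, so data arriving at run time is not checked.
// Here `x` is really a string, and JavaScript's coercion rules apply.
const untrusted = JSON.parse('{"x":"3","y":4}') as Point;
const shifted = untrusted.x + 1; // typed as number, but actually "31"
console.log(typeof shifted, shifted);
```

The compiler catches mistakes inside the typed code, but the program that actually runs is still JavaScript.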

As the web evolved into a valid platform for building and distributing applications, more and more complex and resource-intensive applications were created. In order to meet the demands of these applications, browser vendors began working on new technologies to integrate into their software without disrupting the normal course of web development. Google and Mozilla, creators of Chrome and Firefox, respectively, took two different paths to achieve this goal, culminating in the creation of WebAssembly.

Google and Native Client

Google developed Native Client (NaCl) with the intent to safely run native code within a web browser. The executable code ran in a sandbox and offered the performance advantages of native code execution. In the context of software development, a sandbox is an environment that prevents executable code from interacting with other parts of your system. It is intended to prevent the spread of malicious code and place restrictions on what the software can do.

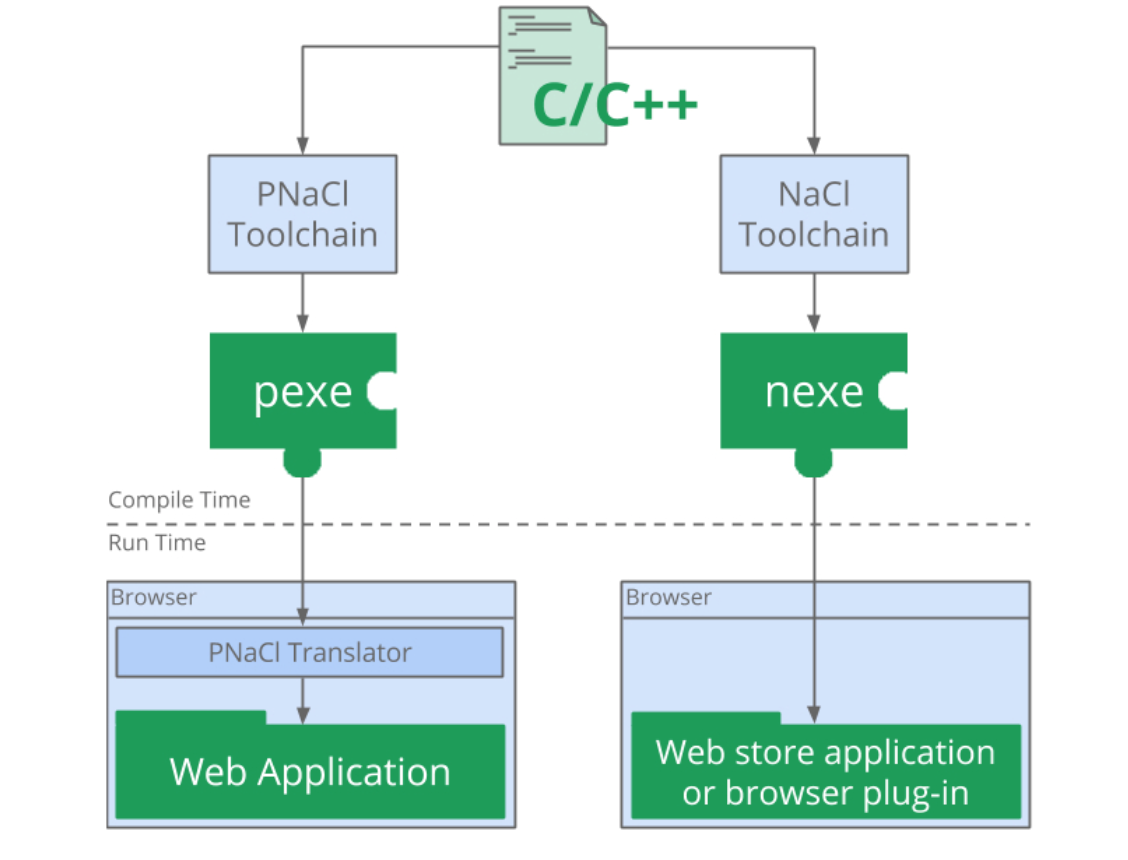

NaCl was tied to a specific architecture, while Portable Native Client ( PNaCl ) was an architecture-independent version of NaCl developed to run on any platform. The technology consisted of two elements:

- Toolchains which could transform C/C++ code to NaCl modules

- Runtime components which were components embedded in the browser that allowed execution of NaCl modules:

Figure 2: The Native Client toolchains and their outputs

NaCl’s architecture-specific executable (nexe) was limited to applications and extensions that were installed from Google’s Chrome Web Store, but PNaCl executables (pexe) could be freely distributed on the web and embedded in web applications.

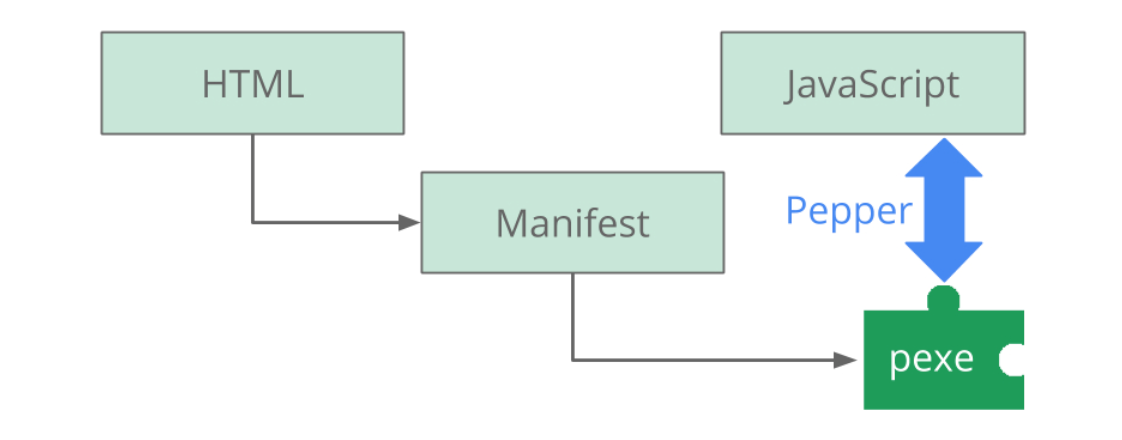

Portability was made possible with Pepper, an open source API for creating NaCl modules, and its corresponding plugin API (PPAPI). Pepper enabled communication between NaCl modules and the hosting browser, and allowed for access to system-level functions in a safe and portable way. Applications could be easily distributed by including a manifest file and a compiled module (pexe) with the corresponding HTML, CSS, and JavaScript:

Figure 3: Pepper’s role in a Native Client application

NaCl offered promising opportunities to overcome the performance limitations of the web, but it had some drawbacks. Although Chrome had built-in support for PNaCl executables and Pepper, other major browsers did not. Detractors of the technology took issue with the black-box nature of the applications as well as the potential security risks and complexity.

Mozilla focused its efforts on improving the performance of JavaScript with asm.js. It wouldn’t add support for Pepper to Firefox due to the incompleteness of its API specification and limited documentation. In the end, NaCl was deprecated in May 2017 in favor of WebAssembly.

Mozilla and asm.js

Mozilla debuted asm.js in 2013 and provided a way for developers to translate their C and C++ source code to JavaScript. The official specification for asm.js defines it as a strict subset of JavaScript that can be used as a low-level, efficient target language for compilers. It’s still valid JavaScript, but the language features are limited to those that are amenable to ahead-of-time (AOT) optimization.

AOT is a technique that the browser’s JavaScript engine uses to execute code more efficiently by compiling it down to native machine code. asm.js achieves these performance gains by having 100% type consistency and manual memory management.

Using a tool such as Emscripten, C/C++ code can be transpiled down to asm.js and easily distributed using the same means as normal JavaScript. Accessing the functions in an asm.js module requires linking, which involves calling its function to obtain an object with the module’s exports.
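The ideas of type consistency, manual memory management, and linking can be sketched in a few lines. This is a simplified illustration written by hand, not Emscripten output: `MathModule`, `add`, and `sum` are hypothetical names, the real asm.js module signature also takes `stdlib` and `foreign` arguments, and the `"use asm"` directive that opens a real module is omitted so the sketch stays ordinary TypeScript.

```typescript
// A hand-written module in the style of asm.js (simplified; see lead-in).
function MathModule(heap: ArrayBuffer) {
  // Manual memory management: one pre-allocated buffer viewed as int32s.
  const HEAP32 = new Int32Array(heap);

  function add(a: number, b: number): number {
    a = a | 0; // coercion marks `a` as a 32-bit integer
    b = b | 0;
    return (a + b) | 0; // the result is also a 32-bit integer
  }

  // Sum `len` int32 values starting at byte offset `ptr`.
  function sum(ptr: number, len: number): number {
    ptr = ptr | 0;
    len = len | 0;
    let total = 0;
    let i = 0;
    for (i = 0; (i | 0) < (len | 0); i = (i + 1) | 0) {
      total = (total + (HEAP32[(ptr + (i << 2)) >> 2] | 0)) | 0;
    }
    return total | 0;
  }

  return { add: add, sum: sum };
}

// Linking: call the module function to obtain an object with its exports.
const buffer = new ArrayBuffer(0x10000); // 64 KiB heap
const linked = MathModule(buffer);
new Int32Array(buffer).set([1, 2, 3], 0); // write three ints at offset 0

console.log(linked.add(2, 3)); // 5
console.log(linked.sum(0, 3)); // 6
console.log(linked.add(2147483647, 1)); // -2147483648 (wraps as int32)
```

Because every value is coerced with `| 0`, an engine can treat the arithmetic as fixed-width integer math instead of generic JavaScript numbers.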

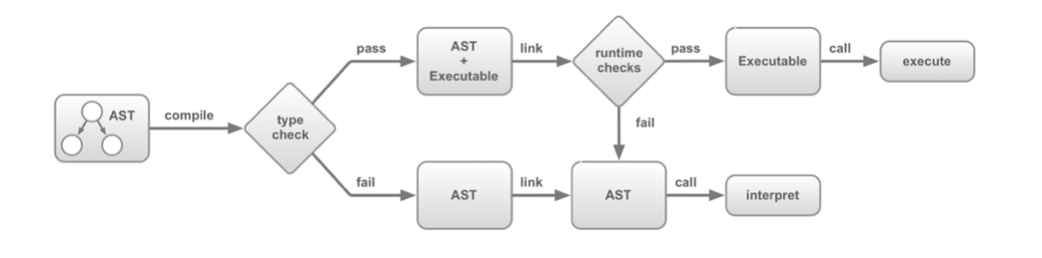

asm.js is incredibly flexible, however, certain interactions with the module can cause a loss of performance. For example, if an asm.js module is given access to a custom JavaScript function that fails dynamic or static validation, the code can’t take advantage of AOT and falls back to the interpreter:

Figure 4: The asm.js AOT compilation workflow

asm.js isn’t just a stepping stone. It forms the basis for WebAssembly’s Minimum Viable Product (MVP). The official WebAssembly site explicitly mentions asm.js in the section entitled WebAssembly High-Level Goals.

So why create WebAssembly when you could use asm.js? Aside from the potential performance loss, an asm.js module is a text file that must be transferred over the network before any compilation can take place. A WebAssembly module is in a binary format, which makes it much more efficient to transfer due to its smaller size.

WebAssembly modules use a promise-based approach to instantiation, which takes advantage of modern JavaScript and eliminates the need for any “is this loaded yet?” code.
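As a rough illustration of both points, the sketch below embeds a tiny hand-assembled binary module and instantiates it through the promise-based `WebAssembly.instantiate` API. The byte array, the `AddFn` type, and the `loadAdd` helper are assumptions made for this example; in practice the bytes would be produced by a compiler and fetched as a `.wasm` file.

```typescript
// A hand-assembled 41-byte module that exports add(i32, i32) -> i32.
const wasmBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, // magic number "\0asm"
  0x01, 0x00, 0x00, 0x00, // binary format version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // function section: one function using type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export it as "add"
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // body: local.get 0, local.get 1, i32.add
]);

type AddFn = (a: number, b: number) => number;

// The promise resolves only after the module is compiled and instantiated,
// so callers never need to poll for readiness.
function loadAdd(): Promise<AddFn> {
  return WebAssembly.instantiate(wasmBytes).then(
    (result) => result.instance.exports.add as AddFn
  );
}

loadAdd().then((add) => {
  console.log(add(2, 3)); // 5
});
```

The whole module, including its export table, fits in 41 bytes, and the calling code only runs once the promise has resolved.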

WebAssembly is born

The World Wide Web Consortium (W3C), an international community built to develop web standards, formed the WebAssembly Working Group in April 2015 to standardize WebAssembly and oversee the specification and proposal process. Since then, the Core Specification and corresponding JavaScript API and Web API have been released.

The initial implementation of WebAssembly support in browsers was based on the feature set of asm.js. WebAssembly’s binary format and corresponding .wasm file combined facets of asm.js output with PNaCl’s concept of a distributed executable.

So how will WebAssembly succeed where NaCl failed? According to Dr. Axel Rauschmayer, there are three reasons detailed at http://2ality.com/2015/06/web-assembly.html#what-is-different-this-time:

First, this is a collaborative effort, no single company does it alone. At the moment, the following projects are involved: Firefox, Chromium, Edge and WebKit. Second, the interoperability with the web platform and JavaScript is excellent. Using WebAssembly code from JavaScript will be as simple as importing a module. Third, this is not about replacing JavaScript engines, it is more about adding a new feature to them. That greatly reduces the amount of work to implement WebAssembly and should help with getting the support of the web development community.

- Dr. Axel Rauschmayer

If this article piqued your interest about WebAssembly, you can check out Learn WebAssembly by Mike Rourke. Learn WebAssembly is both explanatory and practical, providing the essential theory and concepts for WebAssembly, and is a must-read for C/C++ programmers keen to leverage WebAssembly to build high-performance web applications.